Top 14 Skills Required for an AI Engineer and How to Assess Them

Hiring strong AI engineers has never been harder. Every candidate claims hands-on expertise, yet few can prove they’ve shipped scalable AI systems that actually work in production.

And that's not the only reason hiring a good AI engineer is difficult. According to Aura Blog, job postings for AI-related roles more than doubled between January and April 2025 (jumping from 66,000 to nearly 139,000). That growth explains why your hiring process feels more competitive and far less predictable.

This guide breaks down exactly how to evaluate real AI engineering capability.

In this article, you’ll see what separates surface-level skill from production readiness and how to assess it without wasting cycles. Plus, you'll learn how to find the best talent for your company's needs.

First, let’s clarify what an AI engineer actually does in real projects.

Pro tip: Need proven tech hires fast? Alpha Apex Group fills roles in 43 days on average, achieves an 80% placement success rate, and backs every hire with a 90-day replacement guarantee. Reach out today and secure the talent your team actually needs.

What Is an AI Engineer?

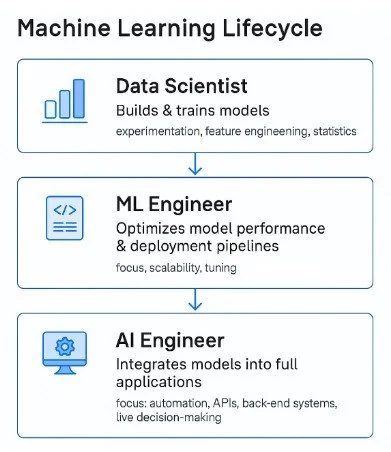

An AI engineer is a software professional who builds and deploys AI applications that move from prototypes to real, production-grade systems. The role brings coding discipline and applied research together, so model development actually translates into delivery.

And that demand for hands-on experience shows up clearly in hiring trends.

365 Data Science reports that most 2025 AI engineering roles require 2-6 years of experience, with 4-6 years appearing in about 12% of job postings. That tells you companies expect engineers who can handle end-to-end system delivery rather than just experimentation.


What Does an AI Engineer Do?

An AI engineer designs, builds, and maintains intelligent systems that automate reasoning and decision-making. In practice, that means combining machine learning models, APIs, and scalable back-end infrastructure to create production-ready systems.

A Data Scientist might build the model, while an ML Engineer tunes performance. But you need AI engineers to translate those outputs into something that works under live traffic and variable inputs.

They manage deployment pipelines, monitoring, and retraining cycles while keeping latency, cost, and data integrity in check. Their work converts research into products that customers actually use.

Why Is the AI Engineer Role Growing?

The AI engineer role is expanding because applied AI engineering has become a competitive advantage for almost every industry. In fact, a report by PwC shows that sectors most exposed to AI see about 3x higher growth in revenue per worker.

Finance, healthcare, retail, and logistics are leading that trend as they embed AI into existing operations. And what once lived in research labs is now part of active product stacks, from LLM-based chat systems to real-time anomaly detection.

Today, you’re no longer hiring someone to experiment with models but to ship scalable, integrated solutions. So, up next, let’s look at where these career paths branch.

Pro tip: Curious how IT and tech hiring trends are shifting this year? Check out this full 2025 recruitment guide to see what’s changing next.

Types of Careers for AI Engineers

AI engineers don’t work in isolation. Their AI engineering skills now extend across departments where automation and data-driven decisions shape results. Here are the areas where you’ll see these roles making the biggest business impact:

Marketing: Using predictive analytics to personalize outreach and measure campaign ROI.

Sales: Applying AI-powered tools to forecast demand and qualify leads.

Customer service: Deploying chatbots and virtual assistants to improve response times. They might even create a customer service automation process.

Finance: Automating fraud detection and real-time risk modeling.

Research and development: Accelerating innovation through model experimentation and simulation.

Now with that out of the way, let’s look at the essential technical skills your AI engineer will need to have.

Core Technical Skills Every AI Engineer Must Have

Strong AI engineer skills go far beyond knowing how to train a model. You need to look for engineers who can translate code into reliable systems that run at scale. So, these are the technical foundations that define whether a candidate can move from concept to deployment.

1. Programming Proficiency

Coding is the backbone of all AI projects. You need engineers who write production-ready code instead of one-off notebooks. The core languages are Python, R, Java, C++, and JavaScript, supported by libraries like TensorFlow, PyTorch, scikit-learn, Keras, and OpenCV.

Python remains dominant because it balances readability with a massive ecosystem of AI tools and frameworks. But language proficiency signals more than syntax knowledge: it also reflects engineering maturity and debugging discipline.

That’s why hiring trends now mirror this skill depth across programming languages. According to itransition:

45.7% of recruiters are looking to hire Python developers

41.5% are searching for JavaScript specialists

39.5% are seeking Java experts

That shows you how valuable multi-language fluency has become in production teams.

The best assessment methods are code reviews, GitHub analysis, or short FastAPI projects that simulate real delivery work.

2. Mathematics and Statistics

Mathematical fluency separates engineers who tune models by intuition from those who optimize them with intent. Core areas include linear algebra, calculus, probability, and statistical modeling.

These skills form the base of neural networks and model evaluation. When candidates understand gradient descent, regularization, and optimization, they can troubleshoot performance instead of guessing.

In interviews, you can validate these fundamentals through short analytical tasks, such as identifying bias-variance trade-offs or improving a regression model. Strong results show more than theory recall: they show the ability to reason through model behavior under different conditions.
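As one possible version of such an analytical task, the sketch below fits a one-variable linear regression with batch gradient descent and an optional L2 (ridge) penalty. The function name, data, and hyperparameters are hypothetical and chosen only for illustration. A candidate could be asked to predict how the fitted slope changes once regularization is switched on, and why.

```python
# Minimal sketch: batch gradient descent for 1-D linear regression
# with an optional L2 penalty on the slope. Names and data are hypothetical.

def fit_ridge_gd(xs, ys, lr=0.05, l2=0.0, epochs=2000):
    """Fit y ~ w * x + b by minimizing mean squared error + l2 * w**2."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of (1/n) * sum((w*x + b - y)^2) + l2 * w^2
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n + 2 * l2 * w
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1

w_plain, b_plain = fit_ridge_gd(xs, ys, l2=0.0)  # no regularization
w_ridge, b_ridge = fit_ridge_gd(xs, ys, l2=1.0)  # slope is shrunk toward zero

print("plain:", round(w_plain, 3), round(b_plain, 3))
print("ridge:", round(w_ridge, 3), round(b_ridge, 3))
```

A strong answer would note that the penalty pulls the slope below its unregularized value and that the intercept rises to compensate, because only the slope is penalized here.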

3. Machine Learning and Deep Learning

This is where applied AI engineering meets real impact. Candidates should understand:

Supervised learning

Unsupervised learning

Reinforcement learning methods

Architectures such as CNNs, RNNs, and transformers

The recent rise of large language models and generative AI means you now need engineers who can integrate pre-trained systems.

To support that kind of integration, data engineering competence has become equally critical. 365 Data Science reports that data pipeline skills appear in 11.6% of AI engineer postings and big data skills in 9.1%. That overlap shows how model design and data infrastructure now go together in modern hiring.

Source: 365 Data Science

You can test this competency by asking candidates to design an ML pipeline or implement model evaluation metrics. Another good test is to have them explain how they balance accuracy with computational cost.
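For the evaluation-metrics part of that exercise, a from-scratch implementation along these lines is a reasonable baseline to expect. This is a minimal sketch for binary classification; the function name and sample labels are hypothetical.

```python
# Minimal sketch: precision, recall, and F1 for binary labels, no libraries.

def precision_recall_f1(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)  # 0.75 0.75 0.75
```

What matters in review is less the arithmetic than the edge cases: whether the candidate handles empty denominators and can say when F1 is the wrong metric to optimize.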

4. Data Handling and Engineering

Every AI initiative depends on the quality of data pipelines. Candidates should handle:

Data preprocessing

Feature engineering

Structured storage through SQL and NoSQL databases

Experience with Spark, Hadoop, and visualization tools like Matplotlib or Tableau is now standard.

A practical test could include cleaning messy JSON data or restructuring logs into a usable dataset. This reveals how they handle unstructured inputs and debug data flows. And it’s something static questions can’t uncover. Strong performance indicates they can handle end-to-end data operations in live production environments.
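A cleaning task of this kind might look like the sketch below. The payload, field names, and rejection rules are all hypothetical; the point is to see whether a candidate normalizes types, drops duplicates, and counts what was rejected instead of failing silently.

```python
# Minimal sketch: clean a messy JSON payload using only the standard library.
import json
from datetime import datetime

RAW = """[
  {"user_id": " 42 ", "amount": "19.99", "ts": "2025-01-03T10:15:00"},
  {"user_id": "43", "amount": null, "ts": "2025-01-03T10:16:00"},
  {"user_id": "42", "amount": "19.99", "ts": "2025-01-03T10:15:00"},
  {"user_id": "", "amount": "5", "ts": "not-a-date"},
  {"user_id": "44", "amount": "7.5", "ts": "2025-01-04T08:00:00"}
]"""

def clean_records(raw_json):
    """Return (cleaned_rows, rejected_count) with typed, de-duplicated rows."""
    cleaned, seen, rejected = [], set(), 0
    for rec in json.loads(raw_json):
        try:
            user_id = int(str(rec.get("user_id", "")).strip())
            amount = float(rec["amount"])           # None or junk raises here
            ts = datetime.fromisoformat(rec["ts"])  # malformed dates raise here
        except (TypeError, ValueError, KeyError):
            rejected += 1
            continue
        key = (user_id, amount, ts)
        if key in seen:  # exact duplicate after normalization
            continue
        seen.add(key)
        cleaned.append({"user_id": user_id, "amount": amount, "ts": ts.isoformat()})
    return cleaned, rejected

cleaned, rejected = clean_records(RAW)
print(len(cleaned), "clean rows,", rejected, "rejected")
```

In this sample, two rows survive, two are rejected for bad values, and one duplicate is dropped without counting as a rejection.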

5. Model Deployment and DevOps

Model quality means little without stability in deployment. You should expect fluency with Docker, Kubernetes, and cloud platforms such as AWS, Azure, and Google Cloud. CI/CD pipelines, version control, and monitoring tools define whether models evolve reliably after release.

Basically, cloud fluency has become a core production skill, and the data shows it.

365 Data Science reports that AWS appears in about 33% of AI engineer postings, Azure in 26%, and GCP in 4%. That pattern tells you production reliability now depends on cloud fluency as much as modeling skill.

Source: 365 Data Science

The best evaluation task is a mini deployment challenge. For instance, containerizing an inference service and setting up automated validation triggers.
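The "automated validation trigger" half of that challenge can be as small as a gate script that a CI job runs before promoting a model. The sketch below is one hypothetical shape for it: the metric names, thresholds, and numbers are assumptions, not values from any specific platform.

```python
# Minimal sketch: a promotion gate comparing a candidate model to the current baseline.
from dataclasses import dataclass

@dataclass
class Metrics:
    accuracy: float
    p95_latency_ms: float

def validate_candidate(baseline, candidate,
                       max_accuracy_drop=0.01, max_latency_increase=0.10):
    """Return a list of human-readable failures; an empty list means 'promote'."""
    failures = []
    if candidate.accuracy < baseline.accuracy - max_accuracy_drop:
        failures.append(
            f"accuracy {candidate.accuracy:.3f} is below the allowed floor "
            f"{baseline.accuracy - max_accuracy_drop:.3f}"
        )
    if candidate.p95_latency_ms > baseline.p95_latency_ms * (1 + max_latency_increase):
        failures.append(
            f"p95 latency {candidate.p95_latency_ms:.0f} ms exceeds the allowed "
            f"{baseline.p95_latency_ms * (1 + max_latency_increase):.0f} ms"
        )
    return failures

baseline = Metrics(accuracy=0.91, p95_latency_ms=120)
good = Metrics(accuracy=0.905, p95_latency_ms=125)
bad = Metrics(accuracy=0.88, p95_latency_ms=150)

print(validate_candidate(baseline, good))  # no failures, safe to promote
print(validate_candidate(baseline, bad))   # two failures, block the release
```

In a pipeline, the job would typically exit with a non-zero status when the list is non-empty so the release step never runs.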

6. APIs and Integration

Integration bridges AI capability with business usability. Engineers who understand REST APIs, FastAPI, Flask, LangChain, and vector databases can connect machine learning algorithms with real applications. Their skill in designing efficient application programming interfaces determines how smoothly models serve requests under load.

That depth of integration expertise is commercially valuable as well. According to Refonte Learning, engineers who manage cloud-based AI infrastructure earn 10-15% higher salaries. This shows how integration drives business value.

To assess this skill, assign a small integration exercise, such as connecting a trained model to an API endpoint or embedding a natural language processing module into a product workflow. The results will reveal how well candidates design for scalability and maintainability.
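To make the endpoint exercise concrete, here is a dependency-free sketch that uses Python's standard `http.server` so it runs anywhere. The `StubModel`, the `/predict` path, and the payload shape are hypothetical stand-ins. In a real exercise the same validation function could sit behind a FastAPI or Flask route instead.

```python
# Minimal sketch: expose a (stub) model behind a JSON prediction endpoint.
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

class StubModel:
    """Stand-in for a trained model; a real one would be loaded from disk."""
    def predict(self, features):
        return sum(features) / len(features)  # hypothetical scoring rule

MODEL = StubModel()

def handle_predict(body):
    """Validate a JSON request body and return (status_code, response_dict)."""
    try:
        payload = json.loads(body)
        features = payload["features"]
        if not isinstance(features, list) or not features:
            raise ValueError("features must be a non-empty list")
        features = [float(x) for x in features]
    except (ValueError, KeyError, TypeError) as exc:
        return 400, {"error": str(exc)}
    return 200, {"score": MODEL.predict(features)}

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_predict(self.rfile.read(length))
        data = json.dumps(response).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def serve(port=8000):
    """Start the server; call this manually, it blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()

print(handle_predict(b'{"features": [1, 2, 3]}'))
print(handle_predict(b"not json"))
```

Keeping validation in a plain function, separate from the HTTP layer, is the design choice worth probing: it makes the logic testable without starting a server and easy to move between frameworks.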

Next, let’s look at applied skills beyond the technical core.

Pro tip: Want to see which agencies deliver results for fast-scaling tech teams? Check out this list of top remote recruiters to find out who leads the pack.

Key Applied & Secondary Skills for AI Engineers

Apart from core coding and modeling ability, great AI engineers show range. These applied skills shape how well solutions scale, integrate, and communicate value across teams. Here are the areas that separate good contributors from production-level engineers.

7. Cloud and Big Data Proficiency

Cloud and data fluency determine how efficiently models scale. Engineers who know AWS S3, Google Cloud Vertex AI (the successor to Cloud ML Engine), and Azure AI can deploy workloads where they perform best. Also, handling distributed systems like Spark SQL and Flink shows the candidate can move beyond prototypes into operational AI.

To assess this, you can set a short deployment challenge.

For instance, ask a candidate to push a model to the cloud and explain their trade-offs between cost and latency. Their answer tells you how they think about cloud computing as part of model lifecycle design.

8. NLP and Computer Vision

Text and image intelligence power most artificial intelligence applications today. Engineers who use NLP frameworks like Hugging Face, spaCy, or OpenAI API handle text-based intelligence effectively. Those who apply computer vision tools such as OpenCV or YOLO tackle visual perception challenges across domains.

The key is to see if candidates can move from using off-the-shelf libraries to customizing them. A practical test, such as building a short sentiment analysis or image classification pipeline, can show whether they understand data flow, fine-tuning, and deployment trade-offs.

9. Visualization and Communication

Numbers alone rarely convince stakeholders. The best engineers explain what the data means instead of just what it shows. Tools like Matplotlib, Seaborn, Power BI, and Tableau help them visualize outcomes and translate model training performance into clear, actionable insights.

You can test this by asking a candidate to present model results to a non-technical audience. Look for structure, clarity, and whether they tie findings to business outcomes rather than metrics alone. This is where communication becomes a measurable part of technical delivery.

10. Ethics, Bias, and Responsible AI

Modern AI teams must balance innovation with accountability. Candidates who understand fairness, transparency, and bias mitigation can prevent small modeling decisions from turning into large-scale operational risks.

So, try to ask scenario-based questions to see how they reason through ethical dilemmas. For example, ask them to explain how they would use explainability tools such as SHAP or LIME to check whether a model leans on a sensitive attribute. Their responses can reveal awareness and judgment under pressure, which is important in real-world AI deployment.
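A scenario question becomes easier to discuss with a number on the table. The sketch below does not use SHAP or LIME, which are feature-attribution libraries. It swaps in a simpler and different check, the demographic parity gap (the difference in positive-prediction rates between groups). The predictions and group labels are hypothetical.

```python
# Minimal sketch: demographic parity gap between groups, standard library only.
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive (1) predictions per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(preds, groups))         # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A useful follow-up is to ask what the candidate would do next: whether a gap like this is acceptable depends on the use case, and attribution tools are one way to investigate where it comes from.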

11. Collaboration and Adaptability

No AI system succeeds in isolation. So, engineers must work with data scientists, MLOps specialists, and product teams to align outcomes with business needs. Collaboration also means shared accountability across the full development lifecycle.

A behavioral interview works well here.

You should ask about a time they adapted to a new tool or framework under tight deadlines. Their story tells you whether they value speed, precision, and teamwork equally. In fast-moving environments shaped by technological advances, adaptability becomes a competitive advantage.

Soft Skills That Distinguish Top AI Engineers

Technical skill gets an engineer hired. Soft skill keeps them effective in fast-changing teams. So, these are the human factors that usually determine whether an AI project ships on time or stalls in communication and decision gaps.

12. Problem-Solving and Critical Thinking

AI engineering moves fast, and decisions rarely have one clear answer. Strong engineers approach every issue with structured reasoning. This includes everything from debugging model drift to deciding between a rule-based or ML-driven solution. They know when to experiment and when to optimize for delivery.

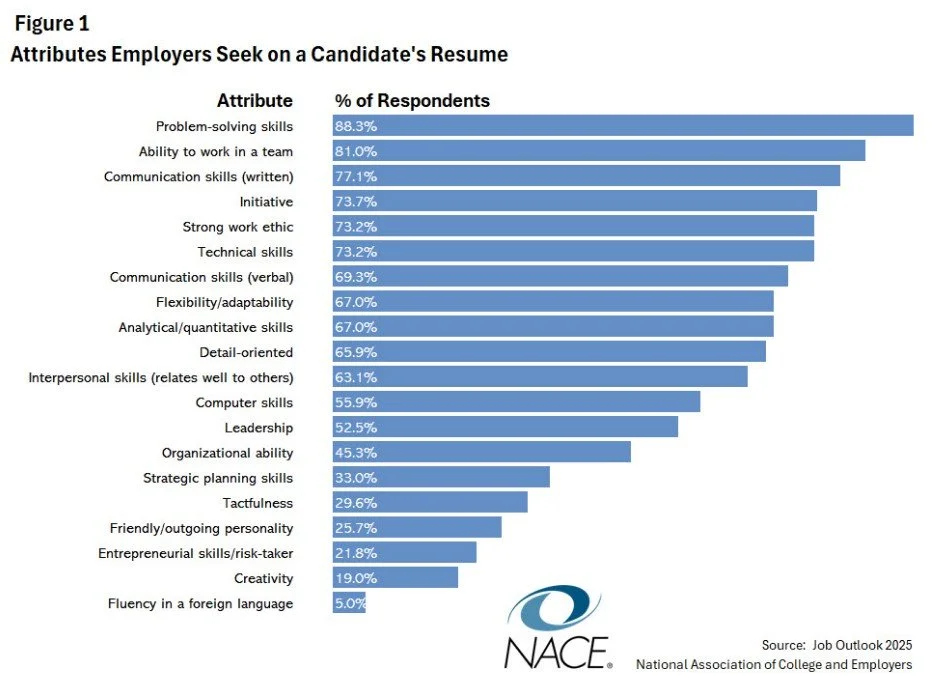

That mindset isn’t optional anymore. According to the National Association of Colleges and Employers, 88.3% of employers now prioritize problem-solving when evaluating candidates. And a separate report found that 57% of respondents view critical thinking as a core requirement for workplace success.

Source: National Association of Colleges and Employers

Together, these numbers show that the best engineers also think through trade-offs that define long-term system value.

13. Communication and Business Alignment

The ability to explain complex models in clear, outcome-focused language separates technically strong engineers from those who lead impact. A candidate who can translate F1 scores or inference latency into business terms earns trust across leadership and product teams.

So, a simple assessment is to ask for a short presentation or write-up explaining the ROI of a system they’ve built. That kind of clarity builds understanding and directly shapes team efficiency and alignment.

According to Pumble research, 64% of business leaders and 55% of knowledge workers say effective communication improves team productivity. That insight reinforces how communication drives both collaboration and measurable output.

14. Continuous Learning

AI doesn’t stand still, and neither can your team. Top engineers stay current through courses, research papers, Kaggle competitions, or internal L&D programs. And asking about the last new framework or paper they studied usually reveals how self-directed their growth really is.

The value here is clear. That habit of constant learning pays off for the individual engineer, and it is organizationally valuable as well.

In fact, 86% of employees consider access to learning opportunities important. Continuous learners adapt faster to shifting tools and standards. That's exactly the kind of resilience high-performing AI teams need.

Next, let’s break down how to assess these skills effectively.

How to Assess AI Engineer Skills Effectively

Hiring AI engineers means that you need to verify their capability. Traditional tests and interviews usually miss what really matters: the ability to think, build, and deliver under realistic production constraints.

So, these are the methods that help you assess that skill with precision.

Resume and Portfolio Review

Start by checking for clarity rather than claims. Strong candidates can describe what they built, how they built it, and why it mattered.

So, look for language like “built X using Y to solve Z,” not vague mentions of “AI projects” or “data pipelines.”

Engineers who quantify results such as model accuracy, latency gains, or deployment uptime show ownership. That kind of measurable detail typically signals engineers who can scale solutions beyond prototypes.

GitHub and Open-Source Contributions

A GitHub profile is more revealing than a resume. Active repositories with consistent commits and clear documentation prove hands-on coding maturity.

But here’s the thing: empty repos or copied coursework are red flags. They indicate surface-level familiarity rather than real problem-solving ability.

When you see structured branches, pull requests, and meaningful issue tracking, that’s evidence of engineering discipline.

Technical Skill Tests

Skill tests should simulate production work rather than classroom puzzles. So, try to use short, structured challenges (around 40 minutes) that reflect real AI workflows.

For example, you might ask a candidate to:

Clean a messy API response.

Design a small ML pipeline.

Or build a simple FastAPI endpoint.

These tasks show not only syntax knowledge but also how the engineer thinks under time constraints.

Pro tip: To make this more effective, focus on how they document and test their code. That’s usually where you’ll see whether they think like maintainers or one-off coders.

Practical Project Assessments

This is where depth matters most. You can:

Assign a short project that mirrors your production setup, something that tests design quality, scalability, and deployment logic.

Ask the candidate to submit readable, well-structured code under a realistic deadline.

Then, review how they handle data preprocessing, evaluate models, and trade off between speed and accuracy.

Tools like Adaface, Fonzi AI, or HackerRank with custom datasets can help you automate this process while keeping it practical.

Interview Frameworks

Interviews should confirm how candidates think instead of how well they memorize. The first round should be a technical deep-dive into their ML pipeline, such as data flow, preprocessing, and optimization trade-offs.

Next, move into system design. Ask them to outline a production-grade AI service, describing how they’d handle scaling and monitoring.

Finally, include a behavioral round focused on collaboration, communication, and continuous learning. That mix helps you evaluate both technical judgment and team fit.

Continuous Evaluation Post-Hire

Assessment doesn’t end after onboarding. So, try to track measurable metrics like deployment speed, model uptime, and innovation output over time. These show whether your hiring process translates into sustained impact.

And that matters because early performance reviews usually expose training gaps or mentoring opportunities you can address proactively.

We also advise you to encourage internal learning programs and pair coding sessions to keep that momentum.

Pro tip: Wondering what’s making tech hiring harder this year? Check out a complete breakdown of recruitment challenges to see what’s really slowing teams down.

Salary and Market Demand

AI engineering has become one of the fastest-moving career paths in tech. Engineers usually progress from junior roles into senior, lead, or specialized tracks like AI architect or MLOps engineer. Each step brings broader system ownership, deeper model accountability, and higher strategic impact.

Now, here’s what’s driving this shift. According to Veritone, there were 35,445 AI-related positions in Q1 2025, 25% more than in 2024. The median salary for those roles reached $156,998, which reflects how strong demand translates directly into higher compensation.

And that trend stays consistent across platforms. Today, Glassdoor reports that AI engineers earn about $138,000 per year in median total pay. That level of compensation mirrors how these roles drive both innovation and cost efficiency.

Tackle the High AI Engineer Demand with Alpha Apex Group

Hiring the right AI engineer takes more than reviewing résumés. It requires precision, speed, and insight into both technology and talent.

That’s exactly what Alpha Apex Group delivers.

With a high placement success rate, we can connect you with professionals who can move AI projects from concept to production.

Our AI recruitment team understands modern demands and matches candidates with the technical depth and business acumen your organization needs. This includes everything from machine learning and data engineering to MLOps and model deployment. Each search is driven by real data, structured assessments, and cultural alignment.

After all, every engineer you hire should drive measurable outcomes rather than just write good code. Alpha Apex Group helps you find those people quickly and confidently.

Our 80% success rate, 43-day average time to placement, and 90-day replacement guarantee show that great hiring can be fast and reliable.

So, if you’re ready to strengthen your AI or tech leadership bench, contact Alpha Apex Group and start building the team that drives real outcomes.